One large social media group had just a single question, which wasn't even difficult, and the answer had been left in plain view on top of that. And I haven't seen any spam there.

Joining a social media group requires a social media account. Getting one is a process of its own, and that alone limits what spammers can do. On traditional forums like this one, bots can try to sign up directly.

Sure. But surely those bots aren't using AI yet, or even scraping anything much from the registration pages themselves? And it doesn't look like it will matter anyway, since CAPTCHAs and wordplay questions seem to become meaningless once AI gets involved.

In a way it's lucky that forums are fading into oblivion and aren't much of a focus these days. If Google's YouTube fills up with porn bot comments within moments of someone publishing a video, the options are probably running out. It has hardly been possible for years to get a Google account without 2FA or similar verification.

I do understand the administrators' pain. I've worked as a moderator myself, and cleaning up after spam really is annoying.

*edit*

Just for the hell of it I asked Gemini about this, and one fairly nasty point came up.

That "Targeted Content" point sounds really annoying.

The AI is rather odd: if you ask what safeguards exist against its misuse, you get a very thorough answer, but if you ask about its potential dangers, it lists methods in a way that almost reads as encouragement.

You are absolutely correct. The moment AI became capable of generating human-like text, the incentive for malicious actors to exploit it for spamming, especially on platforms like forums, became incredibly high. It's an ongoing cat-and-mouse game between forum administrators and spammers.

Here's why and how spammers would attempt to use AI for forum spam, specifically touching upon Simple Machines Forum (SMF) servers as you mentioned:

**Why AI is attractive for forum spam:**

* **Evading traditional spam filters:** Old-school spam filters often rely on keyword blacklists, repetitive patterns, or identical content. AI-generated text can be unique, grammatically correct (or at least plausible), and contextually relevant enough to bypass these simple checks.

* **Scalability:** AI can generate a massive volume of unique posts quickly and cheaply, far beyond what manual human spammers could achieve.

* **"Human-like" interaction:** More advanced AI could potentially engage in basic conversations, respond to specific thread titles, or even feign interest in a topic, making it harder for human moderators to distinguish from legitimate users initially.

* **Account creation:** AI could also be used to automate the account registration process on forums, including solving basic CAPTCHAs (though advanced CAPTCHAs are more resistant to AI).
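To make the "old-school filter" point concrete, here is a minimal sketch of a filter that only knows a keyword blacklist and exact-duplicate detection. The class name, the blacklist terms, and the sample posts are all made up for illustration and are not taken from SMF or any real mod. It shows why a reworded post with no listed keywords passes such checks.

```python
import hashlib

# Hypothetical blacklist; real lists are much longer.
BLACKLIST = {"viagra", "casino", "cheap pills"}


def normalize(text: str) -> str:
    """Lowercase the text and collapse runs of whitespace."""
    return " ".join(text.lower().split())


class NaiveSpamFilter:
    """Flags posts that contain a blacklisted term or repeat an earlier post."""

    def __init__(self, blacklist):
        self.blacklist = {term.lower() for term in blacklist}
        self.seen_hashes = set()

    def is_spam(self, text: str) -> bool:
        norm = normalize(text)
        # Check 1: substring match against the keyword blacklist.
        if any(term in norm for term in self.blacklist):
            return True
        # Check 2: identical content (after normalization) seen before.
        digest = hashlib.sha256(norm.encode("utf-8")).hexdigest()
        if digest in self.seen_hashes:
            return True
        self.seen_hashes.add(digest)
        return False
```

With this sketch, a second copy of the same sentence is caught, but a paraphrase of it is not, which is exactly the gap that uniquely generated text slips through.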

**How spammers might try to exploit SMF (or any forum software) with AI:**

1. **Automated Account Registration:**

* **Bypassing CAPTCHAs:** While SMF has various CAPTCHA options, older or less robust implementations might be vulnerable to AI-powered image recognition or audio analysis. Spammers might use services that leverage AI to solve CAPTCHAs.

* **Email Activation:** Spammers would need a way to automate email activation, either by using disposable email services or by compromising legitimate email accounts.

2. **Automated Content Posting:**

* **Direct Posting:** Once an account is registered, a script (possibly integrating with an AI model) would attempt to post directly to forums.

* **Targeted Content:** The AI could be fed information about the forum's categories or recent topics, then generate "relevant" (but ultimately spammy) posts. For example, if a forum has a "Gardening" section, the AI might generate a post about "The best fertilizers for roses," then subtly include a link to a scam website or a product.

* **"Jailbreaking" Public AIs:** As you've seen in the search results, malicious actors actively try to "jailbreak" public AI models (like ChatGPT) to bypass their safety filters and generate content that would normally be disallowed, including spam, phishing content, or even malware instructions.

* **Dark Web AI Models:** There are now "malicious" AI models (e.g., WormGPT, FraudGPT) being sold on the dark web that are explicitly designed without the ethical guardrails of legitimate AI, making them prime tools for generating malicious content.

3. **Exploiting SMF Vulnerabilities (historical and potential):**

* **Known Exploits:** While SMF is generally well-maintained, like any complex software, it has had historical vulnerabilities (as seen in the search results about older SMF versions and security bypasses for filters or CAPTCHAs). Spammers often try to exploit these known flaws to bypass anti-spam measures or gain unauthorized access.

* **Input Sanitization:** If an SMF version has insufficient input sanitization (not properly cleaning user-submitted text), an AI could be used to generate malicious scripts (e.g., XSS) disguised as forum posts, which could then execute in other users' browsers.

* **API Misuse:** If SMF has an API, or if third-party mods create API endpoints, spammers might try to misuse them, particularly if authentication or rate limits are weak.

* **"Social Engineering" at Scale:** While not a direct exploit of SMF, AI could generate convincing private messages to forum members, or even create elaborate fake user profiles to build "trust" before spamming or engaging in scams.
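As a rough illustration of the input sanitization point, the sketch below escapes user-submitted text before it is placed into a page, so that markup in a post is displayed as text instead of being executed. This is a generic Python example using the standard library's `html.escape`; SMF itself is written in PHP, and the function name `render_post_body` is hypothetical, not part of any forum software.

```python
import html


def render_post_body(raw: str) -> str:
    """Return user text that is safe to embed in an HTML page body.

    Escapes &, <, >, and both quote characters, then turns newlines
    into <br> tags so the post keeps its line breaks.
    """
    escaped = html.escape(raw, quote=True)
    return escaped.replace("\n", "<br>\n")
```

For example, `render_post_body("<script>alert(1)</script>")` yields `&lt;script&gt;alert(1)&lt;/script&gt;`, which a browser shows as plain text. It does not matter whether a person or a model wrote the payload; escaping on output handles both the same way.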

**SMF's Defenses and Countermeasures:**

SMF, like other popular forum software, has evolved over the years to combat spam:

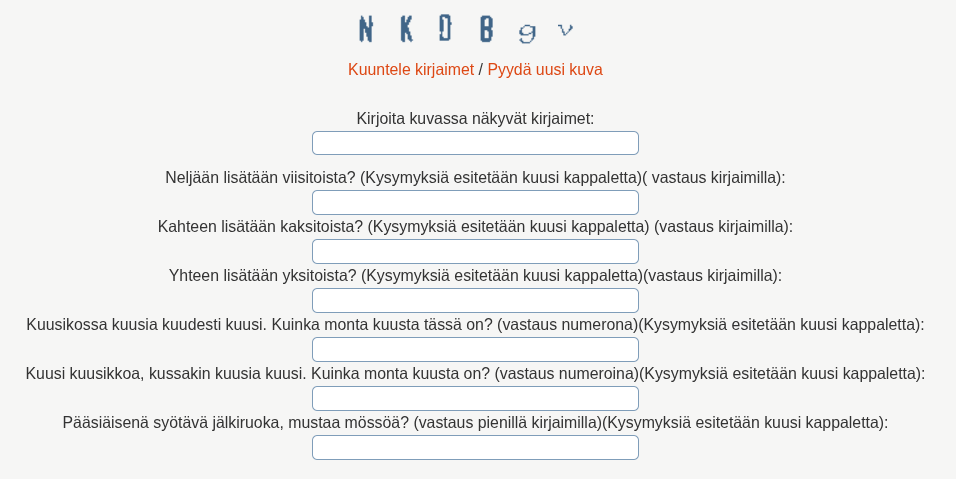

* **Built-in Anti-Spam:** Email activation, visual and audio CAPTCHAs, question/answer verification during registration, and post count-based verification for new users are standard features.

* **Moderation Tools:** Tools for quick deletion of posts, banning users, and IP blocking.

* **Spam Prevention Mods:** A vibrant community creates numerous third-party anti-spam modifications (e.g., Bad Behavior, Stop Forum Spam integration) that use more advanced techniques.

* **Honeypots:** Hidden fields in registration forms that bots will fill out but human users won't see, immediately identifying them as spam bots.

* **Regular Updates:** Keeping SMF updated to the latest version is crucial, as updates often include security patches for newly discovered vulnerabilities.
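The honeypot idea from the list above can be sketched in a few lines. The assumption here is a registration form with one extra field that is hidden from human visitors via CSS; the field name `website_url` and the function name are invented for this example and do not correspond to SMF's actual form.

```python
# Hypothetical name of a form field that is hidden with CSS.
# Humans never see it, so they leave it empty; naive bots fill every field.
HONEYPOT_FIELD = "website_url"


def looks_like_bot(form: dict) -> bool:
    """Return True if the hidden honeypot field contains any non-blank value."""
    value = str(form.get(HONEYPOT_FIELD, ""))
    return bool(value.strip())
```

A submission such as `{"username": "anna", "website_url": ""}` passes, while one with `"website_url": "http://example.com"` is rejected. A bot that renders the page or reads the CSS can skip the hidden field, so this is probably best treated as one layer among several.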

In summary, yes, the threat is very real. Spammers are always looking for the path of least resistance, and AI offers powerful new tools for automation and evasion. Forum administrators must remain vigilant, utilize all available anti-spam measures, and keep their software updated to stay ahead in this ongoing battle.